Marin Vlastelica

Hi, I am Marin, a PhD student at the Max Planck Institute for Intelligent Systems in Tuebingen, Germany, as part of the International Max Planck Research School for Intelligent Systems. My thesis advisory committee consists of Georg Martius, Peter Dayan, Michael Muehlebach and Aamir Ahmad; my primary supervisor is Georg Martius.

I hold a master's degree in computer science with a focus on machine learning from the Karlsruhe Institute of Technology, Karlsruhe, Germany, and a bachelor's degree in computer science from the University of Zagreb, Croatia.

In 2017 I was fortunate to receive a research fellowship with the Blue Brain Project in Geneva, Switzerland, where I came into contact with neuroscience. In 2021 I spent time at Amazon as an applied scientist intern, working on variational inference, reinforcement learning and NLP in collaboration with Patrick Ernst and Gyuri Szarvas. I spent part of 2022 at DeepMind, hosted by Kimberly Stachenfeld in Peter Battaglia's group, working on diffusion generative modelling for building better optimizers in close collaboration with Arnaud Doucet.

In terms of research, I am broadly interested in sequential decision making (e.g., RL), representation learning, generative modelling and implicit layers. I am actively looking for collaborators! If you are interested, you can reach me at mvlastelica at tue dot mpg dot de.

In my free time I enjoy playing guitar, reading opinionated books on various topics, and all sorts of sports.

Research

Diverse Offline Imitation via Fenchel Duality

Leveraging duality for skill extraction

Marin Vlastelica, Pavel Kolev, Jin Cheng, Georg Martius

European Workshop on Reinforcement Learning, 2023

paper

We show that Fenchel duality can be utilized to maximize a diversity objective subject to f-divergence constraints.
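As background only (not the paper's exact objective), the textbook identity that makes Fenchel duality useful for f-divergences is the variational form below, where f^* is the convex conjugate of f and the supremum runs over functions T. For the KL divergence, f(t) = t log t gives f^*(u) = e^{u-1}.

```latex
f^{*}(u) = \sup_{t} \big( u\,t - f(t) \big), \qquad
D_f(P \,\|\, Q) = \mathbb{E}_{Q}\!\left[ f\!\left( \tfrac{dP}{dQ} \right) \right]
  = \sup_{T} \Big( \mathbb{E}_{P}[T(x)] - \mathbb{E}_{Q}\big[ f^{*}(T(x)) \big] \Big)
```

Writing a divergence as a supremum of expectations turns a constraint on D_f into something that can be estimated from samples of P and Q; how the paper combines this with its diversity objective is not shown here.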

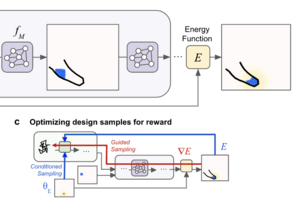

Diffusion Generative Inverse Design

Building better optimizers by guided diffusion

Marin Vlastelica, Tatiana Lopez-Guevara, Kelsey Allen, Peter Battaglia, Arnaud Doucet, Kimberly Stachenfeld

ICML Workshop on Structured Probabilistic Inference and Generative Modeling, 2023

paper

We propose an improved sampling procedure for diffusion generative models that approximately samples from a target distribution defined by an energy (cost) function over designs in a complex simulation environment.

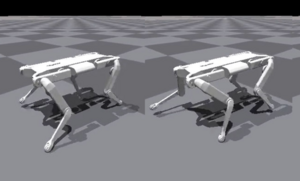

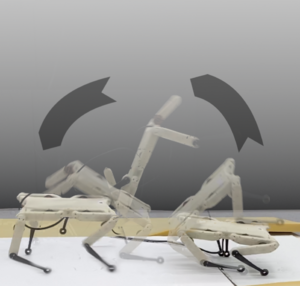

Learning Agile Skills via Adversarial Imitation of Rough Partial Demonstrations

Wasserstein adversarial learning of 'bad' demonstrations

Chenhao Li, Marin Vlastelica, Sebastian Blaes, Jonas Frey, Felix Grimminger, Georg Martius

CoRL (best paper nominee), 2022

paper

In this work we propose a method that lets a quadruped robot imitate rough demonstrations, based on Wasserstein adversarial learning of an imitation reward. We trained the policy with domain randomization and successfully transferred it to the real system, where the robot was able to reproduce agile motions.

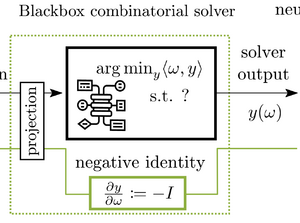

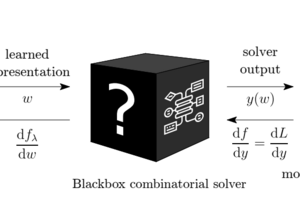

Gradient Backpropagation Through Combinatorial Algorithms: Identity with Projection Works

No need for a solver call on the backward pass (sometimes)

Subham Sekhar Sahoo*, Anselm Paulus*, Marin Vlastelica, Vít Musil, Volodymyr Kuleshov, Georg Martius

ICLR, 2022

paper

As a continuation of the blackbox-backprop line of work, we introduce a simple modification to the algorithm: treating the solver as an identity mapping in the computation graph on the backward pass.

Coupled with projections that avoid degenerate cases, this works comparably well to blackbox-backprop.
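A minimal sketch of the identity backward rule, assuming a toy maximization solver (one-hot argmax) and a hypothetical projection that centers and normalizes the solver input; the projections used in the paper may differ. With a maximization solver, raising w_i can only make y_i more likely to switch on, so the plain identity has the natural sign. Note that `backward` never calls the solver.

```python
import math


def solver(p):
    """Toy blackbox solver (maximization): one-hot indicator of the largest entry."""
    best = max(range(len(p)), key=lambda i: p[i])
    return [1.0 if i == best else 0.0 for i in range(len(p))]


def project(w):
    """Hypothetical projection: center w, then normalize it to unit length.

    Returns the projected vector and the norm needed on the backward pass.
    Degenerate if all entries of w are equal (the norm is then zero).
    """
    mean = sum(w) / len(w)
    c = [wi - mean for wi in w]
    norm = math.sqrt(sum(ci * ci for ci in c))
    return [ci / norm for ci in c], norm


def forward(w):
    p, _ = project(w)
    return solver(p)


def backward(w, grad_y):
    """Treat the solver as the identity (dL/dp := dL/dy), then backprop exactly through project()."""
    p, norm = project(w)
    # Jacobian of c -> c / ||c|| is (I - p p^T) / ||c||
    dot = sum(pi * gi for pi, gi in zip(p, grad_y))
    grad_c = [(gi - pi * dot) / norm for pi, gi in zip(p, grad_y)]
    # Jacobian of centering is I - (1/n) 1 1^T: subtract the mean gradient
    mean_g = sum(grad_c) / len(grad_c)
    return [g - mean_g for g in grad_c]
```

Because the projected input is always centered and unit-norm, the returned gradient is orthogonal to both the all-ones direction and p, so it never just shifts or rescales the solver input, changes the argmax would ignore anyway.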

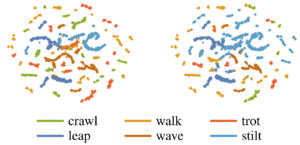

Versatile Skill Control via Self-supervised Adversarial Imitation of Unlabeled Mixed Motions

Extracting meaningful behaviors by adversarial learning.

Chenhao Li, Sebastian Blaes, Pavel Kolev, Marin Vlastelica, Jonas Frey, Georg Martius

RSS, 2022

We use adversarial learning for unsupervised skill discovery, resulting in a skill set that is controllable via a latent variable and whose skills are useful for solving the downstream task.

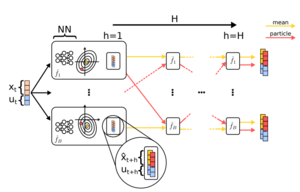

Risk-Averse Zero-Order Trajectory Optimization

How can we account for uncertainty in zero-order trajectory optimizers?

Marin Vlastelica*, Sebastian Blaes*, Cristina Pinneri, Georg Martius

CoRL, 2021

paper

In this work we address the problem of efficient uncertainty estimation in zero-order trajectory optimization with the Cross-Entropy Method (CEM). Moreover, we show that distinguishing between epistemic and aleatoric uncertainty is essential for avoiding so-called “risky” behaviour.
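A minimal sketch of the general idea, assuming a hypothetical ensemble of cost models whose disagreement stands in for epistemic uncertainty, penalized inside plain CEM. This is not the paper's estimator; in particular it does not model aleatoric uncertainty at all.

```python
import random
import statistics

# Hypothetical ensemble of learned cost models: all agree that x = 2 is good, but they
# disagree (epistemic uncertainty) more and more as sum(x) moves away from 0.
SLOPES = [-1.0, 0.0, 1.0]


def ensemble_costs(x):
    """Cost of plan x under each ensemble member."""
    return [sum((xi - 2.0) ** 2 for xi in x) + a * sum(x) for a in SLOPES]


def risk_averse_cost(x, beta):
    """Mean ensemble cost plus beta times the ensemble disagreement (population std)."""
    costs = ensemble_costs(x)
    return statistics.fmean(costs) + beta * statistics.pstdev(costs)


def cem(cost_fn, dim, iters=30, pop=64, n_elite=8, init_std=2.0, seed=0):
    """Cross-Entropy Method: sample plans, keep the elites, refit a diagonal Gaussian."""
    rng = random.Random(seed)
    mean, std = [0.0] * dim, [init_std] * dim
    for _ in range(iters):
        samples = [[rng.gauss(m, s) for m, s in zip(mean, std)] for _ in range(pop)]
        elites = sorted(samples, key=cost_fn)[:n_elite]
        mean = [statistics.fmean(e[i] for e in elites) for i in range(dim)]
        # small floor on the std keeps a little exploration alive
        std = [max(statistics.pstdev([e[i] for e in elites]), 1e-3) for i in range(dim)]
    return mean


neutral = cem(lambda x: risk_averse_cost(x, beta=0.0), dim=3)  # ignores disagreement
averse = cem(lambda x: risk_averse_cost(x, beta=1.0), dim=3)   # pays for disagreement
```

With beta = 0 the optimum of this toy objective is x = 2 per coordinate; with beta = 1 it moves to about 1.59, trading some nominal cost for lower model disagreement.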

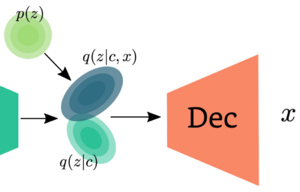

Taming Continuous Posteriors for Latent Variational Dialogue Policies

Making continuous latents for task-oriented dialogue great again

Marin Vlastelica, Patrick Ernst, Gyuri Szarvas

AAAI, 2021

Latent action reinforcement learning for task-oriented dialogue has been successful on benchmarks such as MultiWOZ, and categorical latents have been argued to be the best choice. We show that with continuous latents, together with a reformulation of the ELBO objective and of the reinforcement learning stage, we can achieve state-of-the-art performance on MultiWOZ.

Neuro-Algorithmic Policies Enable Fast Combinatorial Generalization

Introducing the neuro-algorithmic policy architecture.

Marin Vlastelica, Michal Rolinek, Georg Martius

ICML, 2021

paper

As a continuation of the blackbox-differentiation line of work, we propose using time-dependent shortest-path solvers to enhance the generalization capabilities of neural network policies. By imposing a prior on the structure of the underlying goal-conditioned MDP, we are able to extract well-performing policies through imitation learning that use blackbox solvers for receding-horizon planning at execution time. As before, this requires no sacrifice in the optimality of the solver used.
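A minimal sketch of receding-horizon planning with a time-dependent shortest-path solver, assuming hand-written per-step cost maps in place of the costs a neural network would predict, and a time-expanded Dijkstra search as the solver (the solver used in the paper may differ). Only the first action of the optimal plan is returned; at execution time one would call it again at every step.

```python
import heapq
import itertools

MOVES = {"stay": (0, 0), "up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def plan_first_action(costs, start, goal):
    """Time-dependent shortest path on a grid (Dijkstra over (time, cell) states).

    costs[t][r][c] is the cost of occupying cell (r, c) at time step t; steps beyond the
    horizon reuse the last cost map. Returns only the first action (receding horizon).
    """
    horizon = len(costs)
    rows, cols = len(costs[0]), len(costs[0][0])
    tie = itertools.count()  # tiebreaker so heapq never compares the action labels
    frontier = [(0.0, next(tie), 0, start, None)]
    visited = set()
    while frontier:
        dist, _, t, cell, first = heapq.heappop(frontier)
        if cell == goal:
            return first
        # once t reaches the last cost map, time no longer matters, so states can merge
        key = (min(t, horizon - 1), cell)
        if key in visited:
            continue
        visited.add(key)
        for name, (dr, dc) in MOVES.items():
            r, c = cell[0] + dr, cell[1] + dc
            if 0 <= r < rows and 0 <= c < cols:
                step_cost = costs[min(t + 1, horizon - 1)][r][c]
                heapq.heappush(frontier, (dist + step_cost, next(tie), t + 1, (r, c), first or name))
    return None  # goal unreachable


ones = [[1.0] * 3 for _ in range(3)]
# a cost spike at cell (0, 1), present only at time step 1
blocked = [[1.0, 100.0, 1.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
action = plan_first_action([ones, blocked, ones], start=(0, 0), goal=(0, 2))
```

With static costs the planner simply moves right; with the transient spike it waits one step first, which a time-independent shortest path could not express.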

Optimizing Rank-based Metrics via Blackbox Differentiation

Ranking as a blackbox combinatorial problem.

Michal Rolinek*, Vit Musil*, Anselm Paulus, Marin Vlastelica, Claudio Michaelis, Georg Martius

CVPR, 2020

paper / article / code

As another continuation of the blackbox differentiation line of work, we show that ranking can be cast as a blackbox solver that satisfies the conditions for efficient gradient computation. This lets us optimize rank-based metrics by simply using efficient implementations of sorting algorithms, instead of learning a differentiable sorting operation. We apply this insight to optimizing mean average precision and recall in object detection and retrieval tasks, where we achieve results comparable to the state of the art at the time.
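A minimal sketch of why ranking fits the blackbox framework: the rank vector is the argmin of a linear objective <y, pi> over permutation vectors pi, and sorting computes it exactly. The backward pass below reuses the blackbox-differentiation rule; the toy loss (the rank of item 0) and lambda = 1 are assumptions for illustration, not the metric losses from the paper.

```python
def rank(scores):
    """rk(y) = argmin over permutation vectors pi of <y, pi>, via sorting (rank 1 = highest score)."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    ranks = [0] * len(scores)
    for r, i in enumerate(order, start=1):
        ranks[i] = r
    return ranks


def rank_backward(scores, grad_ranks, lam=1.0):
    """Blackbox-differentiation gradient: -(rk(y) - rk(y + lam * dL/drk)) / lam."""
    perturbed = [s + lam * g for s, g in zip(scores, grad_ranks)]
    return [-(a - b) / lam for a, b in zip(rank(scores), rank(perturbed))]


scores = [0.1, 0.9, 0.5]
# toy loss L = rank of item 0, so dL/drk = [1, 0, 0]
grad_scores = rank_backward(scores, [1.0, 0.0, 0.0])
```

A gradient-descent step on `scores` raises item 0 and lowers the items currently ranked above it, which is exactly what lowers this toy loss.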

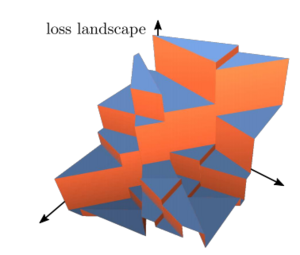

Differentiation of Blackbox Combinatorial Solvers

Can we embed combinatorial solvers in neural architectures?

Marin Vlastelica*, Anselm Paulus*, Vit Musil, Georg Martius, Michal Rolinek

ICLR, 2019

paper / article / code

Problems that are inherently combinatorial remain a hindrance for classical deep learning methods. Traditional approaches to propagating gradients through combinatorial solvers rely on sample-based estimates or solver relaxations. We show that for a specific class of solvers (those minimizing a linear objective), we can efficiently compute gradients of an implicit piecewise-linear interpolation of the objective. This allows us to achieve unprecedented generalization performance on representation learning tasks with a combinatorial flavor.
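A minimal sketch of the backward rule for a solver that minimizes a linear cost <w, y>: perturb the solver input along the incoming gradient, call the solver once more, and return the difference of the two solutions scaled by 1/lambda. The toy top-k solver, lambda = 10 and the squared-error loss are assumptions for illustration.

```python
def solver(w, k=2):
    """Toy blackbox solver: y = argmin over y in {0,1}^n with sum(y) = k of <w, y> (the k cheapest items)."""
    cheapest = set(sorted(range(len(w)), key=lambda i: w[i])[:k])
    return [1.0 if i in cheapest else 0.0 for i in range(len(w))]


def blackbox_backward(w, y_hat, grad_y, lam=10.0, k=2):
    """Gradient of the piecewise-linear interpolation: -(y_hat - y_lam) / lam."""
    w_perturbed = [wi + lam * gi for wi, gi in zip(w, grad_y)]  # w' = w + lam * dL/dy
    y_lam = solver(w_perturbed, k)  # one extra solver call on the backward pass
    return [-(a - b) / lam for a, b in zip(y_hat, y_lam)]


# Usage: push the solver from selecting items {0, 1} towards a target selection {2, 3}.
w = [1.0, 2.0, 3.0, 4.0]
y_hat = solver(w)  # [1.0, 1.0, 0.0, 0.0]
y_target = [0.0, 0.0, 1.0, 1.0]
grad_y = [2.0 * (a - b) for a, b in zip(y_hat, y_target)]  # dL/dy of the squared error
grad_w = blackbox_backward(w, y_hat, grad_y)
```

The resulting gradient, [-0.1, -0.1, 0.1, 0.1], makes a descent step raise the cost of items 0 and 1 and lower that of items 2 and 3, steering the unchanged solver towards the target.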

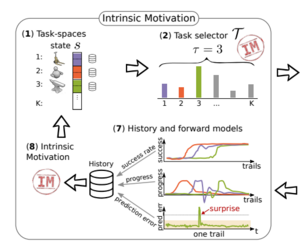

Control What You Can: Intrinsically Motivated Task-Planning Agent

Extracting planning graphs by intrinsic motivation.

Sebastian Blaes, Marin Vlastelica, Jia-Jie Zhu, Georg Martius

NeurIPS, 2019

paper / article

In this work we propose a hierarchical reinforcement learning algorithm that constructs planning graphs while using computational resources efficiently, guided by intrinsic motivation in the form of prediction error and a measure of task improvement.

Reinforcement Learning with Liquid State Machines

Can we use spiking neural networks for continuous control?

Marin Vlastelica

ICANN, 2017

paper

In this work we propose a liquid state machine approach to reinforcement learning of continuous motor control. With a careful initialization of the spectral radius, the liquid state machine was shown to extract useful features for motor control, which can then be used with classical RL algorithms.
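A minimal sketch of the common reservoir-computing recipe of rescaling recurrent weights to a target spectral radius, assuming a target of 0.9 and estimating the radius with Gelfand's formula instead of an eigensolver. The paper's own initialization scheme may differ, and all names here are hypothetical.

```python
import math
import random


def matvec(W, x):
    """Dense matrix-vector product for a list-of-rows matrix."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in W]


def spectral_radius(W, steps=500, seed=0):
    """Estimate rho(W) with Gelfand's formula rho = lim_k ||W^k x||^(1/k).

    The iterate is renormalized each step and the log-growth accumulated, so nothing
    overflows; this also works when the dominant eigenvalues are a complex pair.
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in W]
    norm = math.sqrt(sum(v * v for v in x))
    x = [v / norm for v in x]
    log_growth = 0.0
    for _ in range(steps):
        x = matvec(W, x)
        norm = math.sqrt(sum(v * v for v in x))
        if norm == 0.0:  # x was mapped to zero (e.g. nilpotent W)
            return 0.0
        log_growth += math.log(norm)
        x = [v / norm for v in x]
    return math.exp(log_growth / steps)


def scale_to_radius(W, target=0.9):
    """Rescale the recurrent weights so that the spectral radius equals `target`."""
    rho = spectral_radius(W)
    return [[target * wij / rho for wij in row] for row in W]


# Usage: a random 20-unit recurrent weight matrix rescaled to spectral radius 0.9.
rng = random.Random(1)
reservoir = scale_to_radius([[rng.gauss(0.0, 1.0) for _ in range(20)] for _ in range(20)])
```

A radius slightly below 1 is the usual choice in reservoir computing: the recurrent dynamics keep a fading memory of past inputs without blowing up.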

KIT at MediaEval 2015: Evaluating Visual Cues for Affective Impact of Movies Task

Evolutionary methods for minimal addition chain exponentiation

Marin Vlastelica*, Sergey Hayrapetyan*, Makarand Tapaswi*, Reiner Stiefelhagen

MediaEval, 2015

paper

In this work we examined which kinds of features can be used to predict video valence, violence and sentiment.

Side Projects

FOX (FlOws in JAX)

Normalizing Flows in JAX

Marin Vlastelica

2021

Developing a Python package with standard normalizing flow implementations that is based on JAX and Flax.
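FOX's own API is not shown on this page, so the following is not FOX code. It is a minimal pure-Python sketch of a building block that normalizing flow libraries typically provide: a RealNVP-style affine coupling layer with its exact inverse and log-determinant, using a fixed, hypothetical `conditioner` in place of a Flax network.

```python
import math


def conditioner(x1):
    """Hypothetical stand-in for a neural network: maps x1 to (log-scales s, shifts t)."""
    s = [math.tanh(0.5 * v) for v in x1]  # tanh keeps the log-scales bounded
    t = [0.3 * v + 0.1 for v in x1]
    return s, t


def coupling_forward(x):
    """Affine coupling: z1 = x1, z2 = x2 * exp(s(x1)) + t(x1). Returns (z, log|det J|)."""
    assert len(x) % 2 == 0, "this sketch splits the input into two equal halves"
    d = len(x) // 2
    x1, x2 = x[:d], x[d:]
    s, t = conditioner(x1)
    z2 = [xi * math.exp(si) + ti for xi, si, ti in zip(x2, s, t)]
    # The Jacobian is triangular, so log|det J| is just the sum of the log-scales.
    return x1 + z2, sum(s)


def coupling_inverse(z):
    """Exact inverse: x2 = (z2 - t(z1)) * exp(-s(z1)), since z1 = x1."""
    d = len(z) // 2
    z1, z2 = z[:d], z[d:]
    s, t = conditioner(z1)
    return z1 + [(zi - ti) * math.exp(-si) for zi, si, ti in zip(z2, s, t)]


def log_prob(x):
    """Change of variables with a standard normal base: log p(x) = log N(z) + log|det J|."""
    z, log_det = coupling_forward(x)
    log_base = sum(-0.5 * v * v - 0.5 * math.log(2.0 * math.pi) for v in z)
    return log_base + log_det
```

In a JAX/Flax version the lists would become `jnp` arrays and the conditioner a trainable module; stacking several such layers with alternating halves gives an expressive flow whose inverse and density stay exact.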

Decentralized Energy Exchange

Based on Ethereum blockchain

Marin Vlastelica, Georgi Urumov, Magnus Goedde

2017

As part of a hackathon, we noticed that the future of energy distribution networks lies with small producers (e.g., via solar cells). The missing component is an exchange that allows producers to trade energy for value, which is what we set out to build. With this project, we won 1st place in a Germany-wide hackathon organized by Microsoft.